Anthropic is making it easier for developers to apply prompt engineering best practices by adding a prompt improvement feature and example management to the Anthropic Console.

According to Anthropic, while prompt quality is important, implementing best practices can be time-consuming, and those practices can vary between model providers. With the new prompt improver, developers can take a prompt, whether newly written or originally written for another model, and refine it using Claude.

The prompt improver applies several techniques: chain-of-thought reasoning, which adds a dedicated section where Claude can systematically think through the task before responding; example standardization, which converts examples into XML format for consistency; example enrichment, which augments existing examples with chain-of-thought reasoning; rewriting, which corrects grammatical issues in the prompt; and prefill addition, which prefills the Assistant message to direct Claude’s actions and enforce a particular output format.

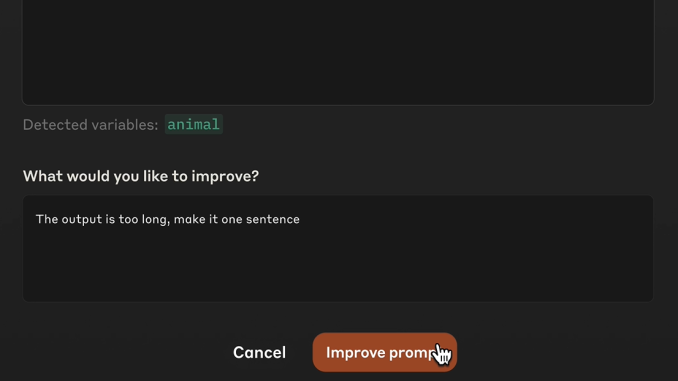
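To make these techniques concrete, here is a minimal Python sketch of what a prompt using XML-formatted examples, a chain-of-thought instruction, and a prefilled Assistant turn might look like. It is an illustration only, not the prompt improver's actual output: the function names (`format_examples`, `build_messages`) and the tag names (`<example>`, `<thinking>`, `<answer>`) are assumptions chosen for the example, and the code builds plain dictionaries in a role/content message shape rather than calling any API.

```python
from xml.sax.saxutils import escape


def format_examples(examples):
    """Wrap (input, output) pairs in XML tags so every example has the same shape.

    The tag names are hypothetical; any consistent set of tags would do.
    """
    blocks = []
    for text, label in examples:
        blocks.append(
            "<example>\n"
            f"<input>{escape(text)}</input>\n"
            f"<output>{escape(label)}</output>\n"
            "</example>"
        )
    return "<examples>\n" + "\n".join(blocks) + "\n</examples>"


def build_messages(task, examples, query):
    """Return a message list with a reasoning instruction and a prefilled assistant turn."""
    user_prompt = (
        f"{task}\n\n"
        f"{format_examples(examples)}\n\n"
        "Think through the problem step by step inside <thinking> tags, "
        "then give the final label inside <answer> tags.\n\n"
        f"<input>{escape(query)}</input>"
    )
    return [
        {"role": "user", "content": user_prompt},
        # Prefill: ending on a partial assistant message nudges the model
        # to continue from it, which helps enforce the output format.
        {"role": "assistant", "content": "<thinking>"},
    ]


messages = build_messages(
    "Classify the sentiment of the input as positive or negative.",
    [("Great & fast service", "positive"), ("Arrived broken", "negative")],
    "Too slow to be useful",
)
```

Keeping the examples in one consistent tag structure makes them easy to add or edit as input/output pairs, and the trailing assistant message is what steers the response to open with its reasoning section.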

Once Claude generates the new prompt, the user can provide feedback on what specifically works or doesn’t work, and that feedback is used to refine the prompt further.

Anthropic’s early testing has shown the prompt improver increasing accuracy by 30% on a multi-label classification task and bringing word count adherence to 100% on a summarization task.

In addition, developers can now manage output examples in the Workbench, which offers another way to improve response quality. “This makes it easier to add new examples with clear input/output pairs or edit existing examples to refine response quality,” Anthropic wrote in a post.

Developers can also use the prompt evaluator to test how the improved prompt performs under different scenarios. The company has added an “ideal output” column to the Evaluations tab to help developers assess outputs on a 5-point scale.

“These features make it easier to leverage prompt engineering best practices and build more reliable AI applications,” Anthropic wrote.