At its annual user conference, swampUp, DevOps company JFrog announced new solutions, along with integrations with companies like GitHub and NVIDIA, designed to help developers strengthen their DevSecOps capabilities and bring LLMs to production quickly and safely.

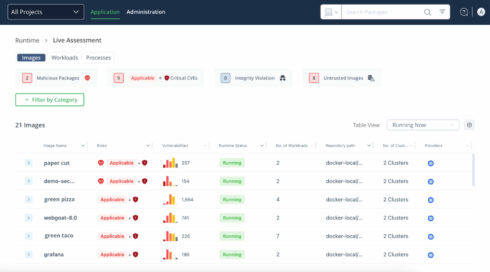

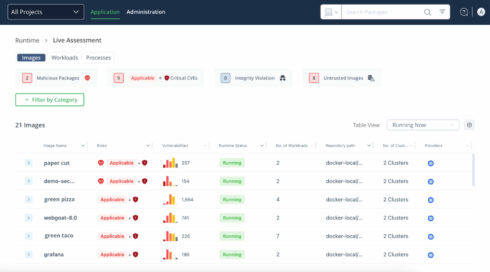

JFrog Runtime is a new security solution that enables developers to discover vulnerabilities in runtime environments. It monitors Kubernetes clusters in real time to identify, prioritize, and remediate security incidents based on their risk.

It gives developers a way to track and manage packages, organize repositories by environment type, and activate JFrog Xray policies. Other benefits include centralized incident awareness, comprehensive analytics for workloads and containers, and continuous monitoring for post-deployment threats such as malware or privilege escalation.

“By empowering DevOps, Data Scientists, and Platform engineers with an integrated solution that spans from secure model scanning and curation on the left to JFrog Runtime on the right, organizations can significantly enhance the delivery of trusted software at scale,” said Asaf Karas, CTO of JFrog Security.

Next, the company announced an expansion of its partnership with GitHub. New integrations will give developers better visibility into project status and security posture, allowing them to address potential issues more quickly.

JFrog customers now get access to a GitHub Copilot Chat extension that can help them select software packages that are up to date, approved by their organization, and safe to use.

The expanded integration also provides a unified view of security scan results from GitHub Advanced Security and JFrog Advanced Security, a job summary page that shows the health and security status of GitHub Actions workflows, and dynamic project mapping and authentication.

Finally, the company announced a partnership with NVIDIA, integrating NVIDIA NIM microservices with the JFrog Platform and JFrog Artifactory model registry.

According to JFrog, this integration will “combine GPU-optimized, pre-approved AI models with centralized DevSecOps processes in an end-to-end software supply chain workflow.” As a result, developers should be able to bring LLMs to production quickly while maintaining transparency, traceability, and trust.

Benefits include unified management of NIM containers alongside other assets, continuous scanning, accelerated computing through NVIDIA’s infrastructure, and flexible deployment options with JFrog Artifactory.

“As enterprises scale their generative AI deployments, a central repository can help them rapidly select and deploy models that are approved for development,” said Pat Lee, vice president of enterprise strategic partnerships at NVIDIA. “The integration of NVIDIA NIM microservices into the JFrog Platform can help developers quickly get fully compliant, performance-optimized models running in production.”