Anthropic’s developer Console now lets developers generate, test, and evaluate AI prompts, with the goal of improving the quality of Claude’s responses.

The Console already includes a built-in prompt generator, powered by Claude 3.5 Sonnet, that lets users describe a task and have Claude turn that description into a high-quality prompt. For example, a user might explain that they need to route inbound support requests to Tier 1, 2, or 3 support or page an on-call engineer, and write “Please write a prompt that reviews inbound messages, then proposes a triage decision along with a separate one sentence justification.” Claude then uses that description to draft a prompt for the task.

Now the company has added a test case generation feature that can generate values for a prompt’s input variables, such as a sample inbound customer support message. Users can then run the prompt to see how Claude responds to that input.

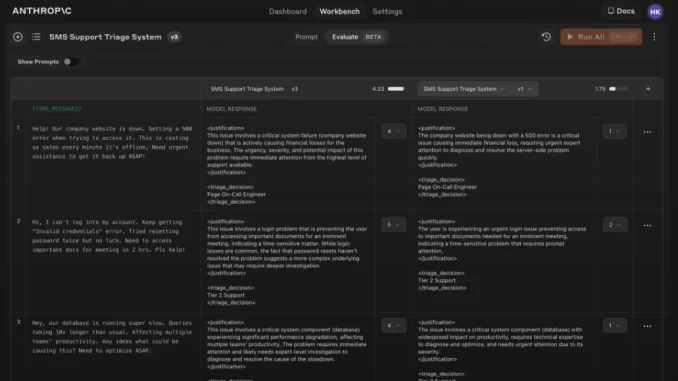

Finally, a new Evaluate feature lets users test a prompt against multiple inputs directly within the Console. Test cases can be added manually, imported from a CSV file, or generated by Claude. They can also be edited once they are in the Console, and the full set of test cases can be run with a single click.

Once the tests have run, users can iterate by creating new versions of the prompt and rerunning the test suite. Users can also compare the outputs of two or more prompts side by side, and subject matter experts can grade response quality on a scale of 1 to 5, helping users judge whether their changes have actually improved the responses.

“When building AI-powered applications, prompt quality significantly impacts results. But crafting high quality prompts is challenging, requiring deep knowledge of your application’s needs and expertise with large language models. To speed up development and improve outcomes, we’ve streamlined this process to make it easier for users to produce high quality prompts,” Anthropic wrote in a blog post.
