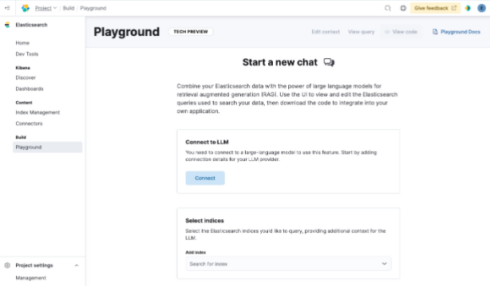

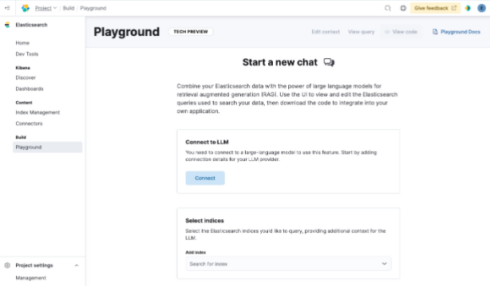

Elastic has just released a new tool called Playground that will enable users to experiment with retrieval-augmented generation (RAG) more easily.

RAG is a practice in which local data, such as private company data or data that is more up to date than the LLM's training set, is supplied to an LLM. This allows the model to give more accurate responses and reduces the occurrence of hallucinations.
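To make the idea concrete, here is a minimal sketch of the RAG pattern in plain Python. It is not how Playground or Elasticsearch implements retrieval: the documents, the word-overlap scoring, and the function names (`retrieve`, `build_prompt`) are all hypothetical stand-ins chosen for illustration. A real system would typically use a search engine or vector database for the retrieval step and send the resulting prompt to an LLM.

```python
from collections import Counter

# Hypothetical "private" documents. In a real deployment these would live
# in a search index rather than an in-memory list.
DOCUMENTS = [
    "The 2024 travel policy caps hotel costs at 200 dollars per night.",
    "Employees accrue 1.5 vacation days per month of service.",
    "The office cafeteria is open from 8am to 3pm on weekdays.",
]


def tokenize(text):
    """Lowercase the text and strip basic punctuation from each word."""
    return [word.strip(".,?!").lower() for word in text.split()]


def score(query, doc):
    """Toy relevance score: number of overlapping words (an assumption,
    standing in for a real ranking function)."""
    query_counts = Counter(tokenize(query))
    doc_counts = Counter(tokenize(doc))
    return sum(min(query_counts[w], doc_counts[w]) for w in query_counts)


def retrieve(query, documents, k=1):
    """Return the k documents with the highest overlap score."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Augment the user's question with retrieved context before it is
    sent to an LLM (the LLM call itself is out of scope here)."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("What is the hotel cap in the travel policy?", DOCUMENTS))
```

The model never sees the full document set, only the passages retrieved for the question, which is why the quality of the retrieval step matters so much for the accuracy of the final answer.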

Playground offers a low-code interface for adding data to an LLM for RAG implementations. Users can draw on any data stored in an Elasticsearch index for this.

It also allows developers to A/B test LLMs from different model providers to see what suits their needs best.

The platform can utilize transformer models in Elasticsearch and also makes use of the Elasticsearch Open Inference API that integrates with inference providers, such as Cohere and Azure AI Studio.

“While prototyping conversational search, the ability to experiment with and rapidly iterate on key components of a RAG workflow is essential to get accurate and hallucination-free responses from LLMs,” said Matt Riley, global vice president and general manager of Search at Elastic. “Developers use the Elastic Search AI platform, which includes the Elasticsearch vector database, for comprehensive hybrid search capabilities and to tap into innovation from a growing list of LLM providers. Now, the playground experience brings these capabilities together via an intuitive user interface, removing the complexity from building and iterating on generative AI experiences, ultimately accelerating time to market for our customers.”
