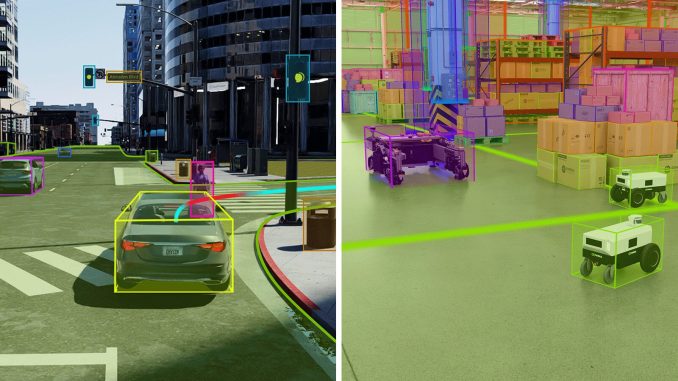

NVIDIA has announced NVIDIA Omniverse Cloud Sensor RTX, a new solution that simulates physical sensors. Developers can use it to build and test software for autonomous machines, such as vehicles, humanoids, industrial manipulators and mobile robots, in virtual test environments.

The new offering enables developers to test sensor perception and other related AI software in a realistic test environment before they deploy their solutions in the real world.

It can be used to check whether perception software correctly recognizes scenarios such as a robotic arm operating correctly, an airport luggage carousel running, a tree branch blocking the road, a factory conveyor belt in motion, or a person nearby.

“Developing safe and reliable autonomous machines powered by generative physical AI requires training and testing in physically based virtual worlds,” said Rev Lebaredian, vice president of Omniverse and simulation technology at NVIDIA. “NVIDIA Omniverse Cloud Sensor RTX microservices will enable developers to easily build large-scale digital twins of factories, cities and even Earth — helping accelerate the next wave of AI.”

The offering is built on the OpenUSD framework and uses NVIDIA RTX ray-tracing and neural-rendering technologies.

Early access to Omniverse Cloud Sensor RTX will be available later this year, and developers can already sign up. So far, NVIDIA has given access to only two companies: Foretellix and MathWorks.
